NOTE:

This article is an early deep-dive into my research and extremely primitive understanding of how a simulated mind might work. I need something robust, performant, and capable of adaptation over time. This is a big ask due to my lack of understanding in the realms of neuroscience and biology. Simply jumping in for a few weeks and trying to absorb as much information as possible is not really a practical response to the problem at hand (esp. when I’m trying to do the same thing along other disciplines). Regardless, this is exactly what I’ve done (and am still doing) and eventually I’ve got to begin working or I’ll have a PhD before I’ve started. I welcome any and all feedback about where I might have gone wrong in either understanding or representing something. These are early days in my work on Nambug, and I expect my thinking and the models I use to be adaptive over time. If you have anything to offer at all, or just want to discuss what I’m doing, please contact me at my twitter @Thr33li! Onward!

The Goal

First things first: a good question might be, “What is the point of all this mucking around with neuroscience?” The answer ends up being fairly complex, but it points back to one of the core elements of Protobug: Artificial Intelligence and its role in adaptive and emergent behaviors. In the realm of game development, AI is varied and in some respects a sordid term. It can be expressed in a huge number of ways:

- Cellular Rule Sets

- Finite State Machines

- Fuzzy Logic

- Biological Simulation

- Planner Systems

- Neural Networks

This is a gross oversimplification of the multitudinous AI systems you might find utilized in any given game, but let’s stick with it for now. A big part of what I would like to see reflected in the ‘Bug series is emergent behavior. So, for the initial iterations of Nambug I tried out various blends of the first half of the list. Unhappy with the results, I discovered the actual problem was my definition of emergent behavior.

One definition for emergent behavior is nicely depicted in The Legend of Zelda: Breath of the Wild. That is to say, a bunch of discrete systems intersect and generate more complex results than any individual system could on its own. Other examples include Caves of Qud and the de facto king of emergent behaviors, Dwarf Fortress. Emergent behavior (sometimes called emergent gameplay) is at least easy to understand -> just make granular micro-systems rather than domain-wide rules, and then allow the systems and the player to freely interact.

Workable, yes, but as I got further along in development, I became less satisfied with this methodology. While I could be surprised by certain interactions of AI elements (especially along increasing numbers of unique systems), it always seemed easy to sense the underlying rules. This could just be a product of my approach, but it led to a feeling that everything possessed a repetitious nature.

The other style of emergent behavior is more closely associated with AI research, especially in the case of generational reinforcement learning. This too has its limits. A gross oversimplification might be: complexity at the expense of additional input (memory, power, time, etc.) and a lack of generalized robustness. These systems are very good at producing results in precisely specified tasks, but that doesn’t account for so-called one-shot learning, unsupervised online learning, and critically, any more generalized adaptation. Side Note: I know these things have been addressed in different models, but I haven’t seen them used in the realm of ALife (other than maybe in The Bibites, which you should check out!). The point of all this -> Looking at what exists helped me to realize my final (AI) goal:

Design an AI that roughly approximates the generalized capabilities of a singular, intelligent biological entity such that it be robust enough to adapt over time and performant enough to allow for many such entities to interact at once.

This reads like some sort of holy grail – but it is one of my many goals for Nambug. I think it’s worth at least shooting for.

Thinking Through The Impossible

What I’m asking for points to an Artificial Neural Network of some sort. The real problem is that most ANNs / FCNNs focus on excelling at singular tasks. While they do so well, and I could conceivably place a few such systems side by side (or hierarchically, as in the case of meta-AI), I would prefer a consolidated single system. I’m certainly not the first to think in this manner.

I’m interested in what sorts of behaviors might be able to emerge organically from a single integrated system, but the more I think about this, it sure sounds like I’m trying to design a brain. This leads me to the core questions:

- How should I model a brain?

- How should I model this brain’s adaptation over time?

- How can I store individual memories, and how would they be recalled?

- How do I define consciousness to then make a facsimile of it?

It should be noted that I’m not a neuroscientist, and I have no formal background in either biology or neuroscience. I am acknowledging I’ve waded through research haphazardly, and that what I present here might have glaring errors. I welcome feedback.

While the first question seems like the easiest (haha) to answer, my research seems to suggest that I should start with the last question and work my way up.

Defining Consciousness

Consciousness is something we’ve struggled with for a great many years, and even the etymology is hotly debated. If the definition is as elusive as the idea of a ‘subjective experience’, then where should I even start? I chose to start with recent ideas in the realm of neuroscience. In my search for more concrete ideas, I found that some research indicates that when it comes to the brain, there is only the subjective. The brain has no direct connection to the world, and so works by way of constantly building and updating predictive models. More critically, it builds these models as a means of shortcutting, and uses our sensory systems as a sort of error-correction mechanism. (I won’t go into everything I discovered here: A. to save some stuff for future posts and, B. I would like for you to check out some of the links below!)
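To make the predict-then-correct idea a little more concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions, not Nambug code: the `Predictor` class, its `learning_rate`, and the single-number "sense" are all hypothetical stand-ins. The model keeps a running guess about one sensed quantity, and each sensory reading is used purely to measure and correct the error in that guess.

```python
from dataclasses import dataclass


@dataclass
class Predictor:
    """Running guess about one sensed quantity (hypothetical example class)."""
    estimate: float = 0.0
    learning_rate: float = 0.5  # assumed value: how strongly an error corrects the guess

    def observe(self, sensed):
        """Compare the current guess with a sensory reading, then correct the guess.

        Returns the prediction error measured before the correction.
        """
        error = sensed - self.estimate
        self.estimate += self.learning_rate * error
        return error


def run_demo():
    """Feed a steady signal and collect the absolute error at each step."""
    predictor = Predictor()
    return [abs(predictor.observe(10.0)) for _ in range(5)]


if __name__ == "__main__":
    print(run_demo())
```

With a steady signal the error shrinks on every observation, which is the "sensory input as error correction" behavior in miniature. A nested version would presumably feed one predictor's error into another, but that is beyond this sketch.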

So if one current, popular idea of consciousness is as a confluence of shortcuts and predictions about the world, then to generate something similar I need to write a model that can make nested models (and predictions) about its world. Of course, this needs to happen in a manner that considers performance. Take my previous concern about pathfinding. What I would like to have happen is not that an actor simply stores the route in some binary memory. Rather, I’m hoping to make a system where some desire stimulates a need to plan a path. Following that, a grouping of associations should be formed (via a firing of specific neurons) about what the actor remembers about a potential path. This is two steps removed from the cheating I complained about in my previous post. Not only does the actor not know the path directly, but it also isn’t simply storing an array of arbitrary room data. I’m not going to bog down this post with specifics, but I am very close to nailing how I will attempt this. The hope is my method will nestle somewhere between efficiency and flexibility. Obviously, I don’t think I’ll be making actually conscious life, but I would like it to learn, adapt, grow, and get things wrong.

Memory And Storage

So how will I make characters that can interact with their world, remember the past, and more importantly generate these predictive models? Once again I turn to the realm of neuroscience. Our collective understanding of memory is slippery and appears to be constantly evolving. The most interesting theories might sound familiar: memory is not actually the process of recalling data-like recordings. Instead, memory is a reconstruction of associations. If I produce a system that can construct models of its understanding of the world, then the only real difference here is that these models be associatively indexed.
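One way to picture "memory as a reconstruction of associations" is spreading activation over a weighted graph of concepts. The Python sketch below is a generic illustration of that technique, not the method Nambug uses; `AssociativeMemory`, the `hops`, `decay`, and `threshold` parameters, and the example concepts are all assumptions made up for the example.

```python
from collections import defaultdict


class AssociativeMemory:
    """Concepts linked by weighted, symmetric associations (hypothetical sketch)."""

    def __init__(self):
        self.links = defaultdict(dict)  # concept -> {neighbor: strength}

    def associate(self, a, b, strength=1.0):
        """Link two concepts in both directions with the given strength."""
        self.links[a][b] = strength
        self.links[b][a] = strength

    def recall(self, cue, hops=2, decay=0.5, threshold=0.1):
        """Spread activation outward from `cue` and return what lights up.

        Activation weakens by `decay` on every hop, so distant or weakly
        linked concepts fall below `threshold` and are not recalled.
        """
        activation = {cue: 1.0}
        frontier = {cue: 1.0}
        for _ in range(hops):
            next_frontier = {}
            for concept, energy in frontier.items():
                for neighbor, strength in self.links.get(concept, {}).items():
                    spread = energy * strength * decay
                    # Keep only the strongest activation seen for each concept.
                    if spread > activation.get(neighbor, 0.0):
                        activation[neighbor] = spread
                        next_frontier[neighbor] = spread
            frontier = next_frontier
        return {c: a for c, a in activation.items()
                if c != cue and a >= threshold}


def build_demo():
    memory = AssociativeMemory()
    memory.associate("kitchen", "food", 1.0)
    memory.associate("food", "hunger", 0.8)
    memory.associate("kitchen", "hallway", 0.6)
    memory.associate("hallway", "door", 0.3)
    return memory


if __name__ == "__main__":
    print(build_demo().recall("hunger"))
```

Here the cue "hunger" reconstructs "food" strongly and "kitchen" more faintly, while "hallway" and "door" are too many hops away to surface. Nothing is stored as a recording; what comes back depends entirely on the cue and the current link strengths.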

One lingering problem I have yet to solve is how to chain these indexes temporally to form episodic memories. It might be as simple as forming associations with conceptual neurons, and aligning each with discrete amounts of time. Another issue with this system is structural: it seems to require an ever-increasing number of neurons (or synaptic connections) to offer any useful granularity about the world. I will continue to poke various sources until something surfaces. My next post in this series will deal more closely with the concepts of memory and storage in Nambug, so I’ll leave it alone for now.
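As a very rough picture of the "align each association with a discrete amount of time" idea, the Python sketch below records which concepts were active at each tick and replays a short run of moments from a cue. This is speculative and purely illustrative: `EpisodicTrace`, the integer ticks, and the `length` parameter are hypothetical, and it deliberately ignores the growth problem mentioned above.

```python
class EpisodicTrace:
    """Ordered record of the concepts active at each discrete tick (hypothetical)."""

    def __init__(self):
        self.ticks = []    # tick numbers, in increasing order
        self.moments = []  # moments[i] holds the concepts active at ticks[i]

    def record(self, tick, concepts):
        """Append one moment; ticks are assumed to arrive in increasing order."""
        if self.ticks and tick <= self.ticks[-1]:
            raise ValueError("ticks must be strictly increasing")
        self.ticks.append(tick)
        self.moments.append(frozenset(concepts))

    def replay_from(self, cue, length=3):
        """Return up to `length` consecutive (tick, concepts) pairs,
        starting at the first recorded moment that contains `cue`."""
        for i, moment in enumerate(self.moments):
            if cue in moment:
                return list(zip(self.ticks[i:i + length],
                                self.moments[i:i + length]))
        return []


def build_demo():
    trace = EpisodicTrace()
    trace.record(1, {"hunger"})
    trace.record(2, {"hallway", "hunger"})
    trace.record(3, {"kitchen"})
    trace.record(4, {"food", "kitchen"})
    return trace


if __name__ == "__main__":
    for tick, concepts in build_demo().replay_from("hallway", length=2):
        print(tick, sorted(concepts))
```

The cue only has to match one moment; the ordering does the rest of the work. Whether each moment should be a plain set like this or a pattern of firing neurons is exactly the open question.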

A Mental Model and Neuroplasticity

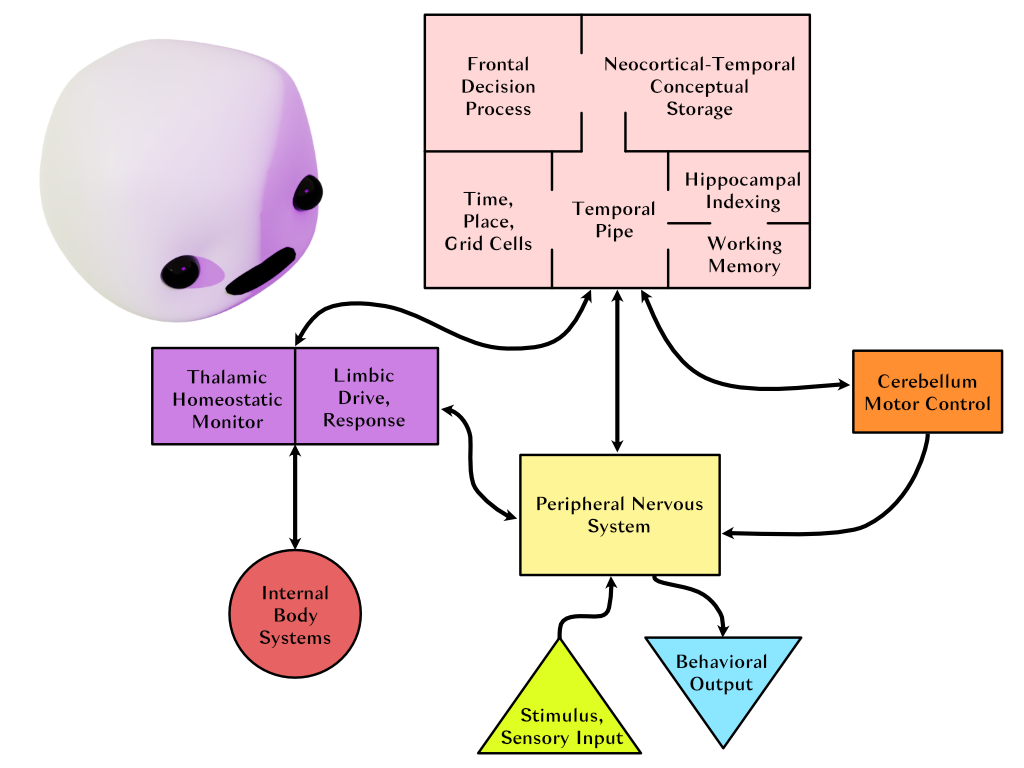

Finally, let’s look at how I might model and adapt the generalized Nambug brain. If I understand the topic correctly, we as humans are born with all of the roughly 86 billion neurons (give or take a few billion) we’ll ever have. This puts a lot of weight on the potentiation and depression of synaptic connections. The general idea is pretty straightforward: positive experiences or goal retrieval strengthen useful connections, while negative experiences weaken them. There will be some hard limitation on how strong a particular synaptic connection can be, so no one connection can ever dominate. This still leaves out learning over the long term (for situations like navigation, where the reward is greatly delayed compared to when the plan was constructed). I have already started to think through a model of a ‘Bug brain as a means of grouping like neurons (see the image above).
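The strengthening-and-weakening rule with a hard cap can be sketched in a few lines of Python. This is a minimal illustration under assumptions, not the Nambug implementation: the `Synapse` class, the `MIN_WEIGHT` and `MAX_WEIGHT` bounds, and the `rate` value are hypothetical, and the sketch does not attempt the delayed-reward problem at all.

```python
from dataclasses import dataclass

MAX_WEIGHT = 1.0  # assumed hard ceiling so no single connection can dominate
MIN_WEIGHT = 0.0  # assumed floor; a fully depressed connection carries nothing


@dataclass
class Synapse:
    """One connection between two neurons (hypothetical example class)."""
    weight: float = 0.5

    def adjust(self, pre_active, post_active, reward, rate=0.1):
        """Strengthen or weaken the connection based on an outcome.

        Only connections whose two ends were both active are changed.
        Positive reward potentiates, negative reward depresses, and the
        result is clamped to the range [MIN_WEIGHT, MAX_WEIGHT].
        """
        if pre_active and post_active:
            self.weight += rate * reward
            self.weight = max(MIN_WEIGHT, min(MAX_WEIGHT, self.weight))
        return self.weight


def run_demo():
    synapse = Synapse()
    for _ in range(20):  # repeated good outcomes saturate at the ceiling
        synapse.adjust(True, True, reward=1.0)
    ceiling = synapse.weight
    for _ in range(20):  # repeated bad outcomes bottom out at the floor
        synapse.adjust(True, True, reward=-1.0)
    floor = synapse.weight
    return ceiling, floor


if __name__ == "__main__":
    print(run_demo())
```

Because the weight is clamped after every update, a long streak of good outcomes cannot push a connection past the ceiling, and a connection that took no part in the outcome is left alone.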

The Next Steps

This simple model does not take into account firing rates, neuronal neighbors, lateral connections, or how to handle auto-association. It also does not begin to suggest how much complexity might be available via this particular set of simplified linkages. I’m positive this model will evolve over time.

Please stay tuned as I start to implement and test these various ideas. As I’ve stated above, I’m already working through these ideas as well as problems that have surfaced. If you have any thoughts at all, let me know! Thank you for reading.

CITATIONS:

These should all be either direct links or embedded videos, though sometimes the embedding fails, in which case you should just be able to paste the link. If you have any questions about these or any other sources please contact me at my twitter @Thr33li:

Consciousness

Memory

Seriously, please listen to this lecture. It’s fascinating. A huge amount of my modeling will end up being based upon her work.

Computational Vignettes

Physical Structures